This blog is intended as a home to some musings about M&E, the challenges that I face as an evaluator and the work that I do in the field of M&E. Oftentimes what I post here is in response to a particularly thought-provoking conversation or piece of reading. This is my space to "Pause and Reflect".

Wednesday, June 29, 2011

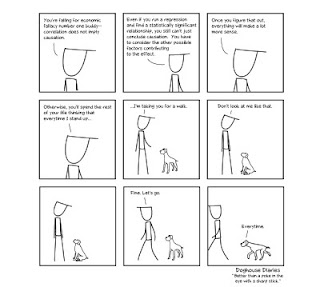

Weekly Funny: "A dog's brain is probably as effective...

as the most sophisticated statistical software on the market..." says Doghouse Diaries.

(Click for larger pic)

Close observations of "Spikkels" and "Trompie", my resident English Springer Spaniels, provide anecdotal evidence to support this theory. The Spaniels will have to try their tricks on the other "boss person" in our household for a few days. I'm off to East Africa for a bit of work.

ANA Results - 2011

I have previously blogged about the implications of the Department of Basic Education's Annual National Assessments (ANAs) for educational evaluations. Yesterday, the grade 3, 6, and 9 results were released. The detailed report can be found on the FEDSAS website.

Some highlights from the Statement on the Release of the Annual National Assessments Results for 2011 by Mrs Angie Motshekga, Minister of Basic Education, Union Buildings: 28 June 2011

Read the full Statement and some reactions to this statement:

Statement by the Western Cape Education Department

News report by the Mail and Guardian Online

Statement by the largest teacher union SADTU

Statement by the official opposition

“Towards a delivery-driven and quality education system”

Thank you for coming to this media briefing on the results of the Annual National Assessments (ANA) for 2011. These tests were written in February 2011 in the context of our concerted efforts to deliver an improved quality of basic education.

It was our intention to release the results on 29 April 2011, at the start of the new financial year, so that we could give ourselves, provinces, districts and schools ample time to analyse them carefully and take remedial steps as and where necessary. Preparing for this was a mammoth task and there were inevitable delays.

Background

We have taken an unprecedented step in the history of South Africa to test, for the very first time, nearly 6 million children on their literacy and numeracy skills in tests that have been set nationally.

This is a huge undertaking but one that is absolutely necessary to ensure we can assess what needs to be done in order to ascertain that all our learners fulfil their academic and human potential.

ANA results for 2011 inform us of many things, but in particular, that the education sector at all levels needs to focus even more on its core business – quality learning and teaching.

We’re conscious of the formidable challenges facing us. The TIMSS and PIRLS international assessments over the past decade have pointed to difficulties with the quality of literacy and numeracy in our schools.

Our own systemic assessments in 2001 and 2004 have revealed low levels of literacy and numeracy in primary schools.

The Southern and Eastern African Consortium for Monitoring Education Quality (SACMEQ) results of 2007 have shown some improvements in reading since 2003, but not in maths.

This is worrying precisely because the critical skills of literacy and numeracy are fundamental to further education and achievement in the worlds of both education and work. Many of our learners lack proper foundations in literacy and numeracy and so they struggle to progress in the system and into post-school education and training.

This is unacceptable for a nation whose democratic promise included that of education and skills development, particularly in a global world that celebrates the knowledge society and places a premium on the ability to work skilfully with words, images and numbers.

Historically, as a country and an education system, we have relied on measuring the performance of learners at the end of schooling, after twelve years. This does not allow us to comprehend deeply enough what goes on lower down in the system on a year by year basis.

Purpose of ANA

Our purpose in conducting and reporting publicly on Annual National Assessments is to continuously measure, at the primary school level, the performance of individual learners and that of classes, schools, districts, provinces and of course, of the country as a whole.

We insist on making ANA results public so that parents, schools and communities can act positively on the information, well aware of areas deserving of attention in the education of their children. The ANA results of 2011 will be our benchmark.

We will analyse and use these results to identify areas of weakness that call for improvement with regard to what learners can do and what they cannot.

For example, where assessments indicate that learners battle with fractions, we must empower our teachers to teach fractions. When our assessments show that children do not read at the level they ought to do, then we need to revisit our reading strategies.

While the ANA results inform us about individual learner performance, they also inform us about how the sector as a whole is functioning.

Going forward, ANA results will enable us to measure the impact of specific programmes and interventions to improve literacy and numeracy.

Administration of ANA

The administration of the ANA was a massive intervention. We can appreciate the scale of it when we compare the matric process involving approximately 600 000 learners with that of the ANA, which has involved nearly 6 million.

There were administrative hiccups but we will correct the stumbling blocks and continue to improve its administration.

The administration of the ANA uncovered problems within specific districts not only in terms of gaps in human and material resources, but also in terms of the support offered to schools by district officials.

ANA results for 2011

Before conducting the ANA, we said we needed to have a clear picture of the health of our public education system – positive or negative – so that we can address the weaknesses that they uncover. This we can now provide.

The results for 2011 are as follows:

In Grade 3, the national average performance in Literacy stands at 35%. In Numeracy our learners are performing at an average of 28%. Provincial performance in these two areas is between 19% and 43%, the highest being the Western Cape, and the lowest being Mpumalanga.

In Grade 6, the national average performance in Languages is 28%. For Mathematics, the average performance is 30%. Provincial performance in these two areas ranges between 20% and 41%, the highest being the Western Cape, and the lowest being Mpumalanga.

In terms of the different levels of performance, in Grade 3, 47% of learners achieved above 35% in Literacy, and 34% of learners achieved above 35% in numeracy.

In the case of Grade 6, 30% of learners achieved above 35% in Languages, and 31% of learners achieved above 35% in Mathematics.

This performance is something that we expected given the poor performance of South African learners in recent international and local assessments. But now we have our own benchmarks against which we can set targets and move forward.

Conclusion

Together we must ensure that schools work and that quality teaching and learning takes place.

We must ensure that our children attend school every day, learn how to read and write, count and calculate, reason and debate.

Working together we can do more to create a delivery-driven quality basic education system. Only this way can we bring within reach the overarching goal of an improved quality of basic education.

Improving the quality of basic education, broadening access, achieving equity in the best interest of all children are preconditions for realising South Africa’s human resources development goals and a better life for all.

I thank you.

Friday, June 24, 2011

Weekly Funny: Pie Charts

Pie charts are sometimes the most effective way to represent data, but sometimes they are really useless. Doghouse Diaries provides an example of the latter.

Thursday, June 23, 2011

Launch: June 2011 Report on the Progress In Implementing The APRM In South Africa

| Progress in implementing the APRM in South Africa | Details |
| --- | --- |
| Where: | Pan African Parliament - Midrand |
| Date: | Tuesday 28 Jun 2011 |
| Time: | 10:00 - 13:00 |
| Event description: | The South African Institute of International Affairs (SAIIA), the Centre for Policy Studies (CPS) and the African Governance Monitoring and Advocacy Project (AfriMAP) will launch the South African APRM Monitoring Project (AMP) Report on Tuesday 28 June 2011 at the Pan African Parliament, 19 Richards Drive, Gallagher Estate, Midrand, commencing at 10:00am. The report, entitled Progress in implementing the APRM in South Africa, is the first attempt to gauge the views and opinions of civil society about the APRM and its progress in this country, while measuring the commitment levels of the government of South Africa in implementing its National Programme of Action in critical areas such as justice sector reforms; crime; corruption; political participation; public service delivery; press freedom; managing diversity; deepening democracy and overall governance, amongst other issues. The report is a culmination of a year-long collective effort among CSOs to jointly assess and analyse governance in South Africa. It finds that progress has been admirable in a few areas, but slow in several others. Details about the event here |

ICT in Education: The Threat of Implementation Failure

I am evaluating a few projects looking at the application of ICTs in Education.

Although my job is to measure the learning outcomes of the projects, it seems that implementation failure is a very real risk. Projects break down even before they can be logically expected to make a difference in learning outcomes. Infrastructure problems and limited skills are some of the big threats. It seems that my projects aren't the only ones dealing with these kinds of implementation challenges.

Greta Björk Gudmundsdottir wrote an interesting article in the open access journal International Journal of Education and Development using Information and Communication Technology. The article is titled: From digital divide to digital equity: Learners’ ICT competence in four primary schools in Cape Town, South Africa. It speaks specifically to the computer skills that would be necessary for ICT solutions. She says:

The potential of Information Communication Technologies (ICT) to enhance curriculum delivery can only be realised when the technologies have been well-appropriated in the school. This belief has led to an increase in government- or donor-funded projects aimed at providing ICTs to schools in disadvantaged communities. Previous research shows that even in cases where the technology is provided, educators are not effectively integrating such technologies in their pedagogical practices. This study aims at investigating the factors that affect the integration of ICTs in teaching and learning. The focus of this paper is on the domestication of ICTs in schools serving the disadvantaged communities in a developing country context. We employed a qualitative research approach to investigate domestication of ICT in the schools. Data for the study was gathered using in-depth interviews. Participants were drawn from randomly sampled schools in disadvantaged communities in the Western Cape. Results show that even though schools and educators appreciate the benefits of ICTs in their teaching and even though they are willing to adopt the technology, there are a number of factors that impede the integration of ICTs in teaching and learning.

It would make sense to build teachers' and learners' skills to work with ICT while they are required to use ICT for learning, but this may require that the projects deliberately look at ICT skills building as part of delivering the learning solutions.

Monday, June 20, 2011

How Many Days Does it Take for Respondents to Respond to Your Survey?

At my consultancy we use SurveyMonkey for all our online survey needs. It is simple to use, reliable, and they are very responsive.

Their research found that the majority of responses to surveys using an email collector were gathered in the first few days after email invitations were sent:

- 41% of responses were collected within 1 day
- 66% of responses were collected within 3 days
- 80% of responses were collected within 7 days

The graph below maps the response rate against time.

The findings suggest that, under most circumstances, it would be best to wait at least seven days before starting to analyze survey responses. Sending out a reminder email after a week would probably boost the response rate somewhat.
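If you want to check whether your own surveys follow a similar pattern, a rough sketch like the one below may help. It assumes you can export the invitation time and the timestamp of each response. The function name `cumulative_response_share` and the sample data are made up for illustration and are not part of SurveyMonkey's tooling.

```python
from datetime import datetime, timedelta


def cumulative_response_share(sent_at, responded_at, day_marks=(1, 3, 7)):
    """Return {days: share of all received responses that arrived within that many days}.

    Hypothetical helper for illustration only. Shares are relative to the
    responses actually received, not to the number of invitations sent.
    """
    if not responded_at:
        return {days: 0.0 for days in day_marks}
    total = len(responded_at)
    shares = {}
    for days in day_marks:
        cutoff = sent_at + timedelta(days=days)
        within = sum(1 for t in responded_at if t <= cutoff)
        shares[days] = within / total
    return shares


# Made-up example: 10 responses, given as hours elapsed since the invitation.
sent = datetime(2011, 6, 20, 9, 0)
hours_elapsed = [2, 5, 20, 23, 30, 50, 70, 100, 160, 200]
responses = [sent + timedelta(hours=h) for h in hours_elapsed]

shares = cumulative_response_share(sent, responses)
for days, share in shares.items():
    print(f"Within {days} day(s): {share:.0%}")
```

Note that this measures the share of responses received so far, which is what the percentages above describe. It says nothing about the overall response rate, for which you would divide by the number of invitations sent instead.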

SurveyMonkey also did some interesting analysis to answer questions like:

How Much Time are Respondents Willing to Spend on Your Survey?

Does Adding One More Question Impact Survey Completion Rate?

Go check it out!

Wednesday, June 15, 2011

Weekly Funny and Free Resources

Today is Wednesday, but it is the end of my work week, hence the "funny" posted today. Tomorrow, 16 June, is Youth Day and since I am classified as youth (i.e. under 35 years of age) by the SA government, I am taking my youthful self to a destination slightly south and west of Pretoria for a couple of days. I will celebrate my freedom and remember those who sacrificed much. I will also watch the sun set over the sea, and eat lobster... and fish, and prawns...

Below is an illustration of how many "black box evaluations" are developed. It comes from a website dedicated to theory of change tools. Check it out!

Monday, June 13, 2011

The DBE's Annual National Assessments

The Department of Basic Education has started the implementation of the Annual National Assessments.

The biggest advantage of implementing the ANA is that it supplements the information about education outcomes and quality currently in place in the education system.

In the DBE notice to all parents, the purpose of the ANA was explained as follows:

1) Teachers will use the individual results to inform their lesson plans and to give them a clear picture of where each individual child needs more attention, helping to build a more solid foundation for future learning.

2) The ANA will assist the Department to identify where the shortcomings are and intervene if a particular class or school does not perform to the national levels.

It is unlikely that a single short test, administered at the beginning of each school year, will be more effective at providing feedback to teachers about the individual needs of learners than the current assessments mandated by the DBE’s assessment policies. Continuous assessment policies already require teachers to test learners for this purpose, and if this information has not been used up until now, it is unlikely that instituting another assessment will make an impact in the school system. Rapid assessments have been shown[1] to be a very cost-effective strategy for improving learner performance, but this requires frequent assessments and teachers with the capacity to analyse and use the results.

Assessments like these have been shown to be a useful accountability tool, depending on how the results are used[2]. It is unclear at this stage how exactly schools and teachers will be held accountable. The results will be shared with parents – which may or may not start a process where parents become more informed and involved in school quality issues. But these results will have to be interpreted very carefully. A great teacher might produce poor literacy results because the learners in the school only started speaking the language of learning and teaching a year before. This is not a fault of the teacher… yet it might be very tempting to use it as a tool for blame. On the other hand, if the learner results show that there is a problem with a specific teacher or a specific school, how exactly will the DBE intervene? Will they have the support and the necessary information to intervene positively? Is it fair to only target maths and language teachers for “intervention” if poor numeracy and literacy results are found? Certainly, it will not benefit the education system if the ANAs serve to antagonise the educators.

[1] Yeh, S.S. (2011). The Cost-Effectiveness of 22 Approaches for Raising Student Achievement. Information Age Publishing.

[2] Bruns, B., Filmer, D. and Patrinos, H.A. (2011). Making Schools Work: New Evidence on Accountability Reforms. Washington, D.C.: World Bank. Accessed online on 13 June 2011 at http://siteresources.worldbank.org/EDUCATION/Resources/278200-1298568319076/makingschoolswork.pdf

Friday, June 10, 2011

The weekly funny: Calculate the probability of a polar bear stealing your car

(Click on pic for a larger image)

Or... if you are not into bears or thieves, calculate the probability of these three intersecting:

Some people have faulty logic, some have statistical skills, and some have no life.

Thursday, June 09, 2011

The theory behind Sensemaker

Yesterday I posted about Sensemaker. A discussion on the SAMEA listserve ensued. Kevin Kelly posted this:

The software (sense maker) is founded on a conceptual framework grounded in the work of Cognitive Edge (David Snowden). The software is very innovative, but not something that one can simply upload and start using. One really needs to grasp the conceptual background first. It should also be noted that the undergirding conceptual framework (Cynefin) is not specifically oriented to evaluation practice, and is developed more as a set of organisational and information management practices. I am hoping to run a one-day workshop at the SAMEA conference which looks at the use of complexity and systems concepts, and which will outline the Cynefin framework and explore its relevance and value for M&E.

In case anyone else is interested in reading up specifically about Cynefin, and about complexity concepts more generally, I share some resources (with descriptions from the publishers' websites):

- Bob Williams and Hummelbrunner (authors of the book Systems Concepts in Action: A Practitioner’s Toolkit) presented a work session at the November 2010 AEA conference where they introduced some systems tools as they relate to the evaluator’s practice.

Systems Concepts in Action: A Practitioner's Toolkit explores the application of systems ideas to investigate, evaluate, and intervene in complex and messy situations. The text serves as a field guide, with each chapter representing a method for describing and analyzing; learning about; or changing and managing a challenge or set of problems. The book is the first to cover in detail such a wide range of methods from so many different parts of the systems field. The book's Introduction gives an overview of systems thinking, its origins, and its major subfields. In addition, the introductory text to each of the book's three parts provides background information on the selected methods. Systems Concepts in Action may serve as a workbook, offering a selection of tools that readers can use immediately. The approaches presented can also be investigated more profoundly, using the recommended readings provided. While these methods are not intended to serve as "recipes," they do serve as a menu of options from which to choose. Readers are invited to combine these instruments in a creative manner in order to assemble a mix that is appropriate for their own strategic needs.

- Another good reference about systems concepts that I found was Jonathan Morell’s book, Evaluation in the Face of Uncertainty.

Unexpected events during an evaluation all too often send evaluators into crisis mode. This insightful book provides a systematic framework for diagnosing, anticipating, accommodating, and reining in costs of evaluation surprises. The result is evaluation that is better from a methodological point of view, and more responsive to stakeholders. Jonathan A. Morell identifies the types of surprises that arise at different stages of a program's life cycle and that may affect different aspects of the evaluation, from stakeholder relationships to data quality, methodology, funding, deadlines, information use, and program outcomes. His analysis draws on 18 concise cases from well-known researchers in a variety of evaluation settings. Morell offers guidelines for responding effectively to surprises and for determining the risks and benefits of potential solutions.

His description of the book is here.

- And then Patton’s latest text (Developmental Evaluation – Applying Complexity Concepts to Enhance Innovation) also touches on complexity issues and Cynefin.

Developmental evaluation (DE) offers a powerful approach to monitoring and supporting social innovations by working in partnership with program decision makers. In this book, eminent authority Michael Quinn Patton shows how to conduct evaluations within a DE framework. Patton draws on insights about complex dynamic systems, uncertainty, nonlinearity, and emergence. He illustrates how DE can be used for a range of purposes: ongoing program development, adapting effective principles of practice to local contexts, generating innovations and taking them to scale, and facilitating rapid response in crisis situations. Students and practicing evaluators will appreciate the book's extensive case examples and stories, cartoons, clear writing style, "closer look" sidebars, and summary tables. Provided is essential guidance for making evaluations useful, practical, and credible in support of social change.

- Rogers also published a nice article about this in the journal Evaluation in 2008.

This article proposes ways to use programme theory for evaluating aspects of programmes that are complicated or complex. It argues that there are useful distinctions to be drawn between aspects that are complicated and those that are complex, and provides examples of programme theory evaluations that have usefully represented and addressed both of these. While complexity has been defined in varied ways in previous discussions of evaluation theory and practice, this article draws on Glouberman and Zimmerman's conceptualization of the differences between what is complicated (multiple components) and what is complex (emergent). Complicated programme theory may be used to represent interventions with multiple components, multiple agencies, multiple simultaneous causal strands and/or multiple alternative causal strands. Complex programme theory may be used to represent recursive causality (with reinforcing loops), disproportionate relationships (where at critical levels, a small change can make a big difference – a 'tipping point') and emergent outcomes.

For more resources, try AEA 365

Wednesday, June 08, 2011

Sense Maker

In a previous post, I ventured that we should start questioning the archaic. Our methods and our ways of communicating results haven't changed much over the past 10 years or so, despite new technologies and preferences.

I have posted a number of examples of interesting data visualizations, but the clip below introduces a new way of collecting information, with the help of a product called Sensemaker.

Here Irene Guijt talks about Sensemaker in the context of Evaluation.

Here is an article in the Stanford Social Innovation Review about a real life application done by Global Giving.

Monday, June 06, 2011

Data Visualization: Museum of Me

Presentation of information is important to anyone that wants to make an impact with what they say. Intel dreamed up another interesting way of presenting different kinds of information.

If you have a Facebook profile (and you don't mind Intel punting their product a bit), why not take a walk in your own museum of you? The "Museum of Me" compiles all your Facebook information and creates a three-minute-long exposé about you. It could be scary... in the same way as listening to your own recorded voice could be scary. Gizmodo says that this is a reminder of why you shouldn't be using Facebook!

Friday, June 03, 2011

Survey answers when you ask people to state the obvious

You run a survey and you ask two questions which should have fairly straightforward answers:

Question 1: Are you Male / Female?

Question 2: What Colour is this?

The following comic from Doghouse Diaries, and the results of an actual colour survey at xkcd, tell you a little about the validity of surveys...

Thursday, June 02, 2011

Evaluation Tasks

I found this graphical representation of Evaluation Tasks from Better Evaluation very useful for thinking about the evaluation process.

(Click on the pic for a larger version).

In my experience the "synthesize findings across evaluations"-bit gets neglected. In my work as an evaluator contracted to many corporate donors, I am usually required to submit an evaluation report for use by the client. I often have to sign a confidentiality agreement that prohibits me from doing any formal synthesis and sharing, even if I am doing similar work for different clients. Informally, I do share from my experience, but the communication is based on my anecdotal retellings of evidence that has been integrated in a very patchy manner. I try to push and prod clients into talking to each other about common issues, but this rarely results in a formal synthesis.

It is not always feasible for the clients who commission evaluations to do this kind of synthesis. Their in-house evaluation capacity rarely includes meta-analysis skills, and even if they contract a consultant to conduct a meta-analysis based on a variety of their own evaluations, there are some problems. Aggregating findings from a range of evaluations that were not designed with a future meta-analysis in mind requires a bit of a “fruit-salad approach” where apples and oranges, and even some peas and radishes, are thrown together. Another obvious problem is that donors who do not care to share the good, bad and ugly of their programs with the entire world would be hesitant to make their evaluations available for a meta-analysis conducted by another donor.

Perhaps we require a “harmonization” effort among the corporate donors working in the same area?

Wednesday, June 01, 2011

Dunning-Kruger Effect and Evaluation

Justin Kruger and David Dunning published a paper in the Journal of Personality and Social Psychology (1999, Vol. 77, No. 6, 1121-1134) and the term "Dunning-Kruger effect" was coined. This is the abstract:

People tend to hold overly favorable views of their abilities in many social and intellectual domains. The authors suggest that this overestimation occurs, in part, because people who are unskilled in these domains suffer a dual burden: Not only do these people reach erroneous conclusions and make unfortunate choices, but their incompetence robs them of the metacognitive ability to realize it. Across 4 studies, the authors found that participants scoring in the bottom quartile on tests of humor, grammar, and logic grossly overestimated their test performance and ability. Although their test scores put them in the 12th percentile, they estimated themselves to be in the 62nd. Several analyses linked this miscalibration to deficits in metacognitive skill, or the capacity to distinguish accuracy from error. Paradoxically, improving the skills of participants, and thus increasing their metacognitive competence, helped them recognize the limitations of their abilities.

Errol Morris described how the following sad story about a guy called McArthur Wheeler inspired Dunning's scientific inquiry:

Wheeler had walked into two Pittsburgh banks and attempted to rob them in broad daylight. What made the case peculiar is that he made no visible attempt at disguise. The surveillance tapes were key to his arrest. There he is with a gun, standing in front of a teller demanding money. Yet, when arrested, Wheeler was completely disbelieving. “But I wore the juice,” he said. Apparently, he was under the deeply misguided impression that rubbing one’s face with lemon juice rendered it invisible to video cameras. If Wheeler was too stupid to be a bank robber, perhaps he was also too stupid to know that he was too stupid to be a bank robber – that is, his stupidity protected him from an awareness of his own stupidity.

What does this have to do with evaluators? All I suggest is that you should think a little about the Dunning-Kruger effect next time you ask people to rate their own competence level in a survey. You would not want to design such a survey without knowing that it is not a very smart thing to do, right?
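If a survey does collect both a self-rating and an objective score, one way to see whether self-ratings can be trusted is to compare the two by quartile of actual performance. The sketch below is only an illustration of that idea: the function names `percentile_ranks` and `calibration_gap_by_quartile` and all the numbers are made up, and it assumes self-ratings are given as estimated percentiles (0 to 100).

```python
from statistics import mean


def percentile_ranks(scores):
    """Percentile rank of each score: percentage of scores strictly below it,
    plus half of the ties (the mid-rank convention)."""
    n = len(scores)
    ranks = []
    for s in scores:
        below = sum(1 for x in scores if x < s)
        ties = sum(1 for x in scores if x == s)
        ranks.append(100.0 * (below + 0.5 * ties) / n)
    return ranks


def calibration_gap_by_quartile(test_scores, self_rated_percentiles):
    """Mean of (self-rated percentile - actual percentile) per quartile of
    actual performance. Positive values mean overestimation."""
    actual = percentile_ranks(test_scores)
    gaps = {1: [], 2: [], 3: [], 4: []}
    for a, s in zip(actual, self_rated_percentiles):
        quartile = min(int(a // 25) + 1, 4)  # 1 = bottom quartile, 4 = top
        gaps[quartile].append(s - a)
    return {q: mean(v) for q, v in gaps.items() if v}


# Made-up data for eight respondents: a test score and a self-estimated percentile.
test_scores = [10, 20, 30, 40, 50, 60, 70, 80]
self_rated = [60, 55, 60, 65, 65, 70, 70, 75]

gaps = calibration_gap_by_quartile(test_scores, self_rated)
for quartile, gap in gaps.items():
    print(f"Quartile {quartile}: mean self-rating minus actual percentile = {gap:+.1f}")
```

A large positive gap in the bottom quartile, shrinking or turning negative towards the top, would be the pattern Kruger and Dunning describe. Without an objective score to compare against, self-ratings alone cannot show it.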

Alternatively you might want to read an earlier post I did about it here.
