This blog is intended as a home for some musings about M&E, the challenges that I face as an evaluator and the work that I do in the field of M&E. Oftentimes what I post here is in response to a particularly thought-provoking conversation or piece of reading. This is my space to "Pause and Reflect".

Wednesday, January 31, 2007

Monitoring without Indicators - Most Significant Change

Check it out at:

http://www.mande.co.uk/docs/MSCGuide.htm and http://www.mande.co.uk/MSC.htm

The guide (prepared by Rick Davies and Jess Dart) explains the MSC technique as follows:

"The most significant change (MSC) technique is a form of participatory monitoring and evaluation. It is participatory because many project stakeholders are involved both in deciding the sorts of change to be recorded and in analysing the data. It is a form of monitoring because it occurs throughout the program cycle and provides information to help people manage the program. It contributes to evaluation because it provides data on impact and outcomes that can be used to help assess the performance of the program as a whole.

Essentially, the process involves the collection of significant change (SC) stories emanating from the field level, and the systematic selection of the most significant of these stories by panels of designated stakeholders or staff. The designated staff and stakeholders are initially involved by ‘searching’ for project impact. Once changes have been captured, various people sit down together, read the stories aloud and have regular and often in-depth discussions about the value of these reported changes. When the technique is implemented successfully, whole teams of people begin to focus their attention on program impact."

Certainly this looks like a very promising technique!

Tuesday, January 30, 2007

Report Back: Making Evaluation Our Own

A special stream on Making Evaluation Our Own was held at the AfrEA conference. After the conference, a small committee of African volunteers worked to capture some of the key points of the discussion. Thanks to Mine Pabari from Kenya for forwarding a copy!

What do you think of this?

Making Evaluation Our Own: Strengthening the Foundations for Africa-Rooted and Africa-Led M&E

Overview & Recommendations to AfrEA

Discussion Overview

On 18 January 2007 a special stream was held to discuss the topic "Making Evaluation Our Own: Strengthening the Foundations for Africa-Rooted and Africa-Led M&E". It was designed to bring together African and other international experiences in evaluation and in development evaluation to help stimulate debate on how M&E, which has generally been imposed from outside, can become

The introductory session aimed to set the scene for the discussion by considering i) What the African evaluation challenges are (Zenda Ofir) ii) The Trends Shaping M&E in the Developing World (Robert Picciotto) iii) The African Mosaic and Global Interactions: The Multiple Roles of and Approaches to Evaluation (Michael Patton & Donna Mertens). The last of these presentations explained, among other things, the theoretical underpinnings of evaluation as it is practised in the world today.

The next session briefly touched on some of the current evaluation methodologies used internationally in order to highlight the variety of methods that exist. It also stimulated debate over the controversial initiative on impact evaluation launched by the Center for Global Development in

The final session aimed to consider some possibilities for developing an evaluation culture rooted in Africa.

Key issues emerging from the presentations and discussion formed the basis for the motions presented below:

- Currently much of the evaluation practice in Africa is based on external values and contexts, is donor-driven, and its accountability mechanisms tend to be directed towards the recipients of aid rather than towards both the recipients and the providers of aid.

- For evaluation to make a greater contribution to development in Africa, it needs to address challenges including those related to country ownership; the macro-micro disconnect; attribution; ethics and values; and power relations.

- A variety of methods and approaches are available and can contribute valuably to framing our questions and our methods of collecting evidence. However, before we can select any particular methodology/approach, we first need to re-examine our own preconceived assumptions; our underpinning values and paradigms (e.g. transformative vs. pragmatic); what is acknowledged as evidence; and by whom.

The lively discussion that ensued led towards the appointment of a small group of African evaluators to note down suggested actions that AfrEA could spearhead in order to fill the gap related to Africa-Rooted and Africa-Led M&E.

The stream acknowledges and extends its gratitude to the presenters for contributing their time to share their experiences and wealth of knowledge. Many thanks also to NORAD for its contribution to the stream, and for its generous offer to support an evaluation that may be used as a test case for an African-rooted approach, an important opportunity to contribute to evaluation in Africa.

In particular, the stream also extends much gratitude to Zenda Ofir and Dr. Sully Gariba for their enormous effort and dedication to ensure that AfrEA had the opportunity to discuss this important topic with the support of highly skilled and knowledgeable evaluation professionals.

Motions

In order for evaluation to contribute more meaningfully to development in Africa:

§ African evaluation standards and practices should be based on African values & world views

§ The existing body of knowledge on African values & worldviews should be central to guiding and shaping evaluation in Africa

§ There is a need to foster and develop intellectual leadership and capacity within Africa

We therefore recommend the following for consideration by AfrEA:

o AfrEA guides and supports the development of African guidelines to operationalise the African evaluation standards and, in doing so, ensures that both the standards and the operational guidelines are based on the existing body of knowledge on African values & worldviews

o AfrEA works with its networks to support and develop institutions, such as universities, to enable them to establish evaluation as a profession and meta-discipline within Africa

o AfrEA identifies mechanisms in which African evaluation practitioners can be mentored and supported by experienced African evaluation professionals

o AfrEA engages with funding agencies to explore opportunities for developing and adopting evaluation methodologies and practices that are based on African values and worldviews and advocate for their inclusion in future evaluations

o AfrEA encourages and supports knowledge generated from evaluation practice within Africa by:

§ Supporting the inclusion of peer reviewed publications on African evaluation in international journals on evaluation (for example, the publication of a special issue on African evaluation)

§ Supporting the development of scholarly publications specifically related to evaluation theories and practices in Africa

Contributors

§ Benita van Wyk –

§ Bagele Chilisa –

§ Abigail Abandoh-Sam –

§ Albert Eneas Gakusi – AfDB

§ Ngegne Mbao –

§ Mine Pabari – Kenya

More Evaluation Checklists

Last week I put a reference to the UFE check-list on my blog, and today I received a very useful link to all kinds of other evaluation check-lists on the AfrEA listserv. Try it out at:

http://www.wmich.edu/evalctr/checklists/checklistmenu.htm

It has check-lists for:

* Evaluation Management

* Evaluation Models

* Evaluation Values & Criteria

* Check-lists are useful for practitioners because they help you to develop and test your methodology with a view to improving it in the future.

* They are useful for those who commission evaluations because they remind you what should be taken into account at every stage of the evaluation process.

* I think, however, that check-lists like these can be particularly powerful if they become institutionalised in practice, that is, if an organisation requires the check-list to be considered as part of its day-to-day business processes.

Monday, January 29, 2007

Making Evaluation our Own

I found it particularly useful because it became patently obvious that there are African world views and African methods of knowing that are not yet exploited for Evaluation in Africa. This of course brings the whole debate about "African" Evaluation theories to bear, and asks which kinds of evaluation theories are currently influencing our practice as evaluators in Africa.

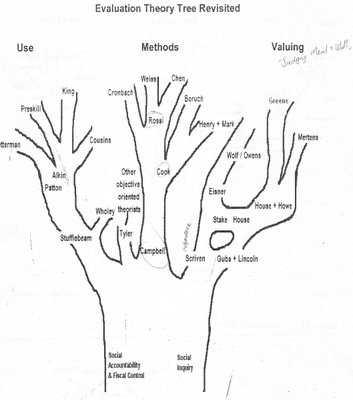

Marvin C. Alkin and Christina A. Christie developed what they call the EVALUATION THEORY TREE. It divides the prominent (North American) evaluation theorists into three main branches: theories that focus on the use of evaluation, theories that focus on the methods of evaluation, and theories that focus on how we value when evaluating. You can find more information about this at http://www.sagepub.com/upm-data/5074_Alkin_Chapter_2.pdf

The second tree is a slightly updated version. It was interesting to note that most of my reading about evaluation has been on "Methods" and "Use".

I think that if we are serious about developing our own African evaluation theories, we might need to develop our own African tree. Bob Picciotto suggested that the African tree might use the branches of the above tree as its roots, and grow its own unique branches.

A small commission from the conference put together a call for action that outlines some key steps that should be taken if we hope to make progress soon. Hopefully I can post this at a later stage.

Keep well!

Thursday, January 25, 2007

UFE & The difference between Evaluation and Research

Have a good day!

PS. I hope to post some more of my thoughts on the AfrEA conference over the next month or so!

Thursday, January 04, 2007

IOCE

http://www.ioce.net/resources/reports.shtml

Very, very usable Evaluation Journal - AJE

I’ve just paged through the December 2006 issue of the American Journal of Evaluation, and once again I am impressed.

It is a very usable journal for practitioners like me, whilst still meeting the academic requirements that a journal should have. It does this by including:

- Articles – That deal with topics applicable to the broad field of program evaluation

- Forum Pieces – A section where people get to present opinions and professional judgments relating to the philosophical, ethical and practical dilemmas of our profession.

- Exemplars – Interviews with practitioners whose work demonstrates, in a specific evaluation study, the application of different models, theories and principles described in the evaluation literature.

- Historical Record – Important turning points within the profession are analysed, or historically significant evaluation works are discussed.

- Method Notes – Shorter papers describing methods and techniques that can improve evaluation practice.

- Book Reviews – Recent books applicable to the broad field of program evaluation are reviewed.

I receive this journal as part of my membership of the American Evaluation Association, at a fraction of what buying the publication on its own would cost.

Go ahead and try it out. Here is a link to its archive: